Introduction to Autonomous Agent Frameworks

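In the previous section we covered the CAMEL framework. CAMEL has several AIs role-play against each other over multiple rounds of dialogue, and in doing so it expands a vague request into concrete tasks. This idea led to a newer concept, the autonomous agent: an agent that executes tasks on its own and keeps running over a long period. Several projects in this space have drawn a lot of attention, notably AutoGPT, BabyAGI, and HuggingGPT, and this chapter introduces them.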

- AutoGPT

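AutoGPT is an agent built on OpenAI's GPT-4. It automatically chains tasks together. It repeatedly breaks the large task entered by the user into subtasks, then executes those subtasks one after another inside the framework until the goal is reached.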

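It manages context with short-term memory and searches the internet to solve problems. This makes it well suited to large tasks with a short time horizon, such as searching the web and analyzing the findings, or drafting a performance plan.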
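To make the idea of short-term memory concrete, here is a minimal sketch of a sliding-window memory. It is not AutoGPT's actual implementation, and the `ShortTermMemory` class is hypothetical. It counts words as a rough stand-in for model tokens. When the window exceeds its budget, the oldest messages are dropped. That forgetting is one reason this kind of memory suits short tasks better than long-running ones.

```python
from collections import deque
from typing import Deque, List, Tuple


class ShortTermMemory:
    """Hypothetical sliding-window memory: keeps only the most recent messages.

    The budget is counted in words as a stand-in for tokens.
    """

    def __init__(self, max_words: int) -> None:
        self.max_words = max_words
        self.messages: Deque[Tuple[str, str]] = deque()  # (role, text) pairs

    def _size(self) -> int:
        return sum(len(text.split()) for _, text in self.messages)

    def add(self, role: str, text: str) -> None:
        self.messages.append((role, text))
        # Drop the oldest messages until the window fits the budget,
        # but always keep at least the newest message.
        while len(self.messages) > 1 and self._size() > self.max_words:
            self.messages.popleft()

    def context(self) -> List[str]:
        """Return the messages currently in the window, oldest first."""
        return [f"{role}: {text}" for role, text in self.messages]


memory = ShortTermMemory(max_words=8)
memory.add("user", "find flower shops in Beijing")  # 5 words
memory.add("agent", "searched the web")             # 8 words in total, still fits
memory.add("agent", "found three shops")            # 11 words, so the oldest is dropped
print(memory.context())  # ['agent: searched the web', 'agent: found three shops']
```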

It is still an experimental project, but it is very active on GitHub.

- BabyAGI

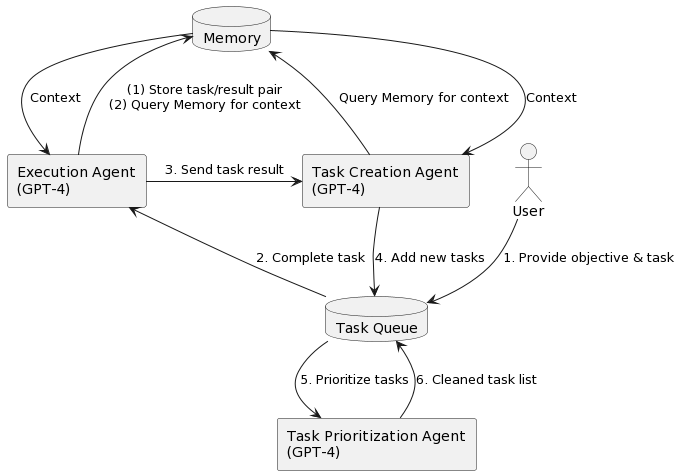

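BabyAGI uses OpenAI's GPT-4 model to understand a task and break it into subtasks. It assigns each subtask a priority and executes it. Task results are stored in the Pinecone vector database, and the task list is reordered based on those results.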

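In other words, when you give BabyAGI an objective, it repeatedly works out what needs to be done to reach it and builds a task list. It then keeps pulling the highest-priority task off the list and executes it. Based on the earlier results and the current task list, it creates further tasks. (The results of earlier tasks are stored in memory or in the Pinecone vector database.)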
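BabyAGI stores earlier results so that later tasks can retrieve the most relevant context. The sketch below is a toy in-memory stand-in for that storage step and does not use Pinecone's API. `ResultMemory` is hypothetical, and so is the word-count `embed` function over a fixed vocabulary; a real setup would call an embedding model. Results are ranked by cosine similarity to the query.

```python
import math
from typing import List, Tuple

# Hypothetical fixed vocabulary; a real system would use an embedding model.
VOCAB = ["beijing", "weather", "hot", "flower", "shop", "storage"]


def embed(text: str) -> List[float]:
    """Toy embedding: count how often each vocabulary word appears."""
    words = text.lower().split()
    return [float(words.count(term)) for term in VOCAB]


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ResultMemory:
    """In-memory stand-in for a vector database holding task results."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, List[float]]] = []  # (task name, result vector)

    def add(self, task: str, result: str) -> None:
        # Store the result's vector, tagged with the task that produced it.
        self.items.append((task, embed(result)))

    def top_k(self, query: str, k: int) -> List[str]:
        # Rank stored results by similarity to the query, most similar first.
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [task for task, _ in ranked[:k]]


memory = ResultMemory()
memory.add("check weather", "beijing weather is hot today")
memory.add("list shops", "flower shop storage tips")
print(memory.top_k("weather in beijing", k=1))  # ['check weather']
```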

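Three agents take part in this loop:

Execution agent: performs the actual task, mainly by sending prompts to the OpenAI API.

Task creation agent: creates a new task list from the result of the previous task, the overall objective, and the current task list.

Prioritization agent: reorders the task list by asking OpenAI to decide the priorities, and returns the reordered list.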
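The loop and its three agents can be sketched in plain Python. This is a minimal sketch under clearly labeled assumptions. The three agent functions are hypothetical stand-ins that follow fixed toy rules instead of calling an LLM, and a simple list plays the role of the vector store. Each iteration pulls the top task, executes it, stores the result, creates follow-up tasks, and then reprioritizes the queue.

```python
from collections import deque
from typing import Deque, Dict, List

# Hypothetical stand-ins for the three LLM-backed agents. In BabyAGI each of
# them sends a prompt to the OpenAI API; here they follow fixed toy rules.
FOLLOW_UPS: Dict[str, List[str]] = {
    "Make a todo list": ["write storage strategy", "check weather"],
}


def execution_agent(objective: str, task: str) -> str:
    return f"result of '{task}'"


def task_creation_agent(objective: str, result: str, task: str, incomplete: List[str]) -> List[str]:
    # Toy rule: look up fixed follow-ups and skip any that are already queued.
    return [t for t in FOLLOW_UPS.get(task, []) if t not in incomplete]


def prioritization_agent(objective: str, tasks: List[str]) -> List[str]:
    # Toy rule: shorter task names first; the real agent asks the LLM to reorder.
    return sorted(tasks, key=len)


def run(objective: str, first_task: str, max_iterations: int) -> List[Dict[str, str]]:
    tasks: Deque[str] = deque([first_task])
    memory: List[Dict[str, str]] = []  # stands in for the vector store
    for _ in range(max_iterations):
        if not tasks:
            break
        task = tasks.popleft()                                  # 1. pull the top task
        result = execution_agent(objective, task)               # 2. execute it
        memory.append({"task": task, "result": result})         # 3. store the result
        new_tasks = task_creation_agent(objective, result, task, list(tasks))  # 4. create tasks
        tasks = deque(prioritization_agent(objective, list(tasks) + new_tasks))  # 5. reprioritize
    return memory


for entry in run("store flowers in Beijing", "Make a todo list", max_iterations=6):
    print(entry["task"], "->", entry["result"])
```

The prioritization step moves "check weather" ahead of "write storage strategy", even though the creation step listed it second.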

- HuggingGPT

HuggingGPT is an agent pipeline built on Hugging Face. It runs in four stages:

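Task planning: the LLM parses the user's request and generates a task list.

Model selection: the LLM reads the descriptions of expert models on Hugging Face and assigns a suitable model to each task.

Task execution: the selected models are invoked to run their assigned tasks.

Response generation: the results of all tasks are combined into a summary that is returned to the user.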
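The four stages can be sketched as a small pipeline. This is a toy illustration, not HuggingGPT's code, and it uses no Hugging Face API. The `EXPERTS` registry and all four functions are hypothetical. Plain functions stand in for the expert models, a string split stands in for the LLM planner, and joining strings stands in for the LLM's summary.

```python
from typing import Callable, Dict, List

# Hypothetical "expert models". In HuggingGPT these would be Hugging Face models
# chosen by the LLM from their descriptions; here they are plain functions.
EXPERTS: Dict[str, Callable[[str], str]] = {
    "translate": lambda text: f"[translation of '{text}']",
    "summarize": lambda text: f"[summary of {len(text.split())} words]",
}


def plan(request: str) -> List[Dict[str, str]]:
    """Task planning: a real system asks the LLM; this toy splits on ' and '."""
    tasks = []
    for part in request.split(" and "):
        verb, _, rest = part.strip().partition(" ")
        tasks.append({"type": verb, "input": rest})
    return tasks


def select_model(task: Dict[str, str]) -> Callable[[str], str]:
    """Model selection: look the task type up in the registry."""
    return EXPERTS[task["type"]]


def execute(tasks: List[Dict[str, str]]) -> List[str]:
    """Task execution: run each task with its selected model."""
    return [select_model(task)(task["input"]) for task in tasks]


def respond(results: List[str]) -> str:
    """Response generation: a real system has the LLM summarize; this joins results."""
    return " | ".join(results)


print(respond(execute(plan("translate hello world and summarize this long article"))))
# [translation of 'hello world'] | [summary of 3 words]
```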

HuggingGPT became popular because it taps into the many models hosted on Hugging Face, which makes its capabilities easy to extend.

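With these three projects covered, let's look at how BabyAGI is used in practice. The code follows the older LangChain API (for example the `langchain.llms` and `langchain.vectorstores` import paths). It stores results in a local FAISS index instead of Pinecone.

First, import the required libraries.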

```python
# Set the API key
import os

os.environ["OPENAI_API_KEY"] = "Your OpenAI Key"

# Import the required libraries and modules
from collections import deque
from typing import Dict, List, Optional, Any

from langchain.chains import LLMChain
from langchain.prompts import PromptTemplate
from langchain.embeddings import OpenAIEmbeddings
from langchain.llms import BaseLLM, OpenAI
from langchain.vectorstores.base import VectorStore
from pydantic import BaseModel, Field
from langchain.chains.base import Chain
from langchain.vectorstores import FAISS
import faiss
from langchain.docstore import InMemoryDocstore
```

Next, initialize the embedding model and the vector store.

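```python
# Define the embedding model
embeddings_model = OpenAIEmbeddings()

# Initialize the vector store: an exact (brute-force) L2 index
embedding_size = 1536  # dimension of OpenAI text-embedding-ada-002 vectors
index = faiss.IndexFlatL2(embedding_size)
vectorstore = FAISS(embeddings_model.embed_query, index, InMemoryDocstore({}), {})
```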

Then define the chains for the different agents: task creation, task prioritization, and task execution.

```python
# Task creation chain
class TaskCreationChain(LLMChain):
    """Chain that generates new tasks."""

    @classmethod
    def from_llm(cls, llm: BaseLLM, verbose: bool = True) -> LLMChain:
        """Build the chain from an LLM."""
        task_creation_template = (
            "You are a task creation AI that uses the result of an execution agent"
            " to create new tasks with the following objective: {objective},"
            " The last completed task has the result: {result}."
            " This result was based on this task description: {task_description}."
            " These are incomplete tasks: {incomplete_tasks}."
            " Based on the result, create new tasks to be completed"
            " by the AI system that do not overlap with incomplete tasks."
            " Return the tasks as an array."
        )
        prompt = PromptTemplate(
            template=task_creation_template,
            input_variables=[
                "result",
                "task_description",
                "incomplete_tasks",
                "objective",
            ],
        )
        return cls(prompt=prompt, llm=llm, verbose=verbose)


# Task prioritization chain
class TaskPrioritizationChain(LLMChain):
    """Chain that reprioritizes tasks."""

    @classmethod
    def from_llm(cls, llm: BaseLLM, verbose: bool = True) -> LLMChain:
        """Build the chain from an LLM."""
        task_prioritization_template = (
            "You are a task prioritization AI tasked with cleaning the formatting of and reprioritizing"
            " the following tasks: {task_names}."
            " Consider the ultimate objective of your team: {objective}."
            " Do not remove any tasks. Return the result as a numbered list, like:"
            " #. First task"
            " #. Second task"
            " Start the task list with number {next_task_id}."
        )
        prompt = PromptTemplate(
            template=task_prioritization_template,
            input_variables=["task_names", "next_task_id", "objective"],
        )
        return cls(prompt=prompt, llm=llm, verbose=verbose)


# Task execution chain
class ExecutionChain(LLMChain):
    """Chain that executes a task."""

    @classmethod
    def from_llm(cls, llm: BaseLLM, verbose: bool = True) -> LLMChain:
        """Build the chain from an LLM."""
        execution_template = (
            "You are an AI who performs one task based on the following objective: {objective}."
            " Take into account these previously completed tasks: {context}."
            " Your task: {task}."
            " Response:"
        )
        prompt = PromptTemplate(
            template=execution_template,
            input_variables=["objective", "context", "task"],
        )
        return cls(prompt=prompt, llm=llm, verbose=verbose)
```

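These three chains correspond to the task creation, prioritization, and execution agents described earlier.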
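LangChain's `PromptTemplate` uses Python format-string placeholders by default. You can therefore preview the text the execution chain sends to the model with plain `str.format`, without calling the API. This sketch copies the execution template from the code above; the objective, context, and task values are made up for illustration. The context, which `_get_top_tasks` returns as a list, is inserted as its Python list representation.

```python
# Same execution template as in ExecutionChain above
execution_template = (
    "You are an AI who performs one task based on the following objective: {objective}."
    " Take into account these previously completed tasks: {context}."
    " Your task: {task}."
    " Response:"
)

# Illustrative values only
prompt_text = execution_template.format(
    objective="Write a flower storage strategy",
    context=["check weather"],  # a list, like the one returned by _get_top_tasks
    task="Draft the plan",
)
print(prompt_text)
```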

Next, define a set of helper functions that run these chains and parse their output.

```python
def get_next_task(
    task_creation_chain: LLMChain,
    result: str,
    task_description: str,
    task_list: List[str],
    objective: str,
) -> List[Dict]:
    """Get the next task."""
    incomplete_tasks = ", ".join(task_list)
    response = task_creation_chain.run(
        result=result,
        task_description=task_description,
        incomplete_tasks=incomplete_tasks,
        objective=objective,
    )
    # Treat each non-empty line of the response as one new task
    new_tasks = response.split("\n")
    return [{"task_name": task_name} for task_name in new_tasks if task_name.strip()]


def prioritize_tasks(
    task_prioritization_chain: LLMChain,
    this_task_id: int,
    task_list: List[Dict],
    objective: str,
) -> List[Dict]:
    """Prioritize tasks."""
    task_names = [t["task_name"] for t in task_list]
    next_task_id = int(this_task_id) + 1
    response = task_prioritization_chain.run(
        task_names=task_names, next_task_id=next_task_id, objective=objective
    )
    new_tasks = response.split("\n")
    prioritized_task_list = []
    for task_string in new_tasks:
        if not task_string.strip():
            continue
        # Expect lines like "2. Some task": split on the first "."
        task_parts = task_string.strip().split(".", 1)
        if len(task_parts) == 2:
            task_id = task_parts[0].strip()
            task_name = task_parts[1].strip()
            prioritized_task_list.append({"task_id": task_id, "task_name": task_name})
    return prioritized_task_list


def _get_top_tasks(vectorstore, query: str, k: int) -> List[str]:
    """Get the top k tasks based on the query."""
    results = vectorstore.similarity_search_with_score(query, k=k)
    if not results:
        return []
    # FAISS returns L2 distances, so a lower score means a closer match
    sorted_results, _ = zip(*sorted(results, key=lambda x: x[1]))
    return [str(item.metadata["task"]) for item in sorted_results]


def execute_task(
    vectorstore, execution_chain: LLMChain, objective: str, task: str, k: int = 5
) -> str:
    """Execute a task."""
    # Use the stored results most relevant to the objective as context
    context = _get_top_tasks(vectorstore, query=objective, k=k)
    return execution_chain.run(objective=objective, context=context, task=task)
```
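`prioritize_tasks` relies on the model returning lines such as `2. Some task`. The standalone function below reproduces only that parsing rule, with no LangChain dependency, so you can check it against sample responses. The function name `parse_numbered_list` and the sample strings are made up for illustration. The function also exposes a pitfall: a line such as `Done.` still splits into two parts and yields the task id `Done`. `int(task["task_id"])` in the main loop would then raise a `ValueError`.

```python
from typing import Dict, List


def parse_numbered_list(response: str) -> List[Dict[str, str]]:
    """Same rule as prioritize_tasks: split each non-empty line on its first '.'."""
    tasks = []
    for line in response.split("\n"):
        if not line.strip():
            continue
        parts = line.strip().split(".", 1)
        if len(parts) == 2:
            tasks.append({"task_id": parts[0].strip(), "task_name": parts[1].strip()})
    return tasks


sample = "2. Check the weather\n\n3. Draft a storage plan\nno number here"
print(parse_numbered_list(sample))
# [{'task_id': '2', 'task_name': 'Check the weather'}, {'task_id': '3', 'task_name': 'Draft a storage plan'}]
```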

Next, define the main class, the BabyAGI controller.

```python
class BabyAGI(Chain, BaseModel):
    """Controller model for the BabyAGI agent."""

    task_list: deque = Field(default_factory=deque)
    task_creation_chain: TaskCreationChain = Field(...)
    task_prioritization_chain: TaskPrioritizationChain = Field(...)
    execution_chain: ExecutionChain = Field(...)
    task_id_counter: int = Field(1)
    vectorstore: VectorStore = Field(init=False)
    max_iterations: Optional[int] = None

    class Config:
        """Configuration for this pydantic object."""

        arbitrary_types_allowed = True

    def add_task(self, task: Dict):
        self.task_list.append(task)

    def print_task_list(self):
        print("\033[95m\033[1m" + "\n*****TASK LIST*****\n" + "\033[0m\033[0m")
        for t in self.task_list:
            print(str(t["task_id"]) + ": " + t["task_name"])

    def print_next_task(self, task: Dict):
        print("\033[92m\033[1m" + "\n*****NEXT TASK*****\n" + "\033[0m\033[0m")
        print(str(task["task_id"]) + ": " + task["task_name"])

    def print_task_result(self, result: str):
        print("\033[93m\033[1m" + "\n*****TASK RESULT*****\n" + "\033[0m\033[0m")
        print(result)

    @property
    def input_keys(self) -> List[str]:
        return ["objective"]

    @property
    def output_keys(self) -> List[str]:
        return []

    def _call(self, inputs: Dict[str, Any]) -> Dict[str, Any]:
        """Run the agent."""
        objective = inputs["objective"]
        first_task = inputs.get("first_task", "Make a todo list")
        self.add_task({"task_id": 1, "task_name": first_task})
        num_iters = 0
        while True:
            # Stop when no tasks are left; otherwise the loop would spin forever
            if not self.task_list:
                break
            self.print_task_list()

            # Step 1: Pull the first task
            task = self.task_list.popleft()
            self.print_next_task(task)

            # Step 2: Execute the task
            result = execute_task(
                self.vectorstore, self.execution_chain, objective, task["task_name"]
            )
            this_task_id = int(task["task_id"])
            self.print_task_result(result)

            # Step 3: Store the result in the vector store (FAISS here, Pinecone in the original BabyAGI)
            result_id = f"result_{task['task_id']}_{num_iters}"
            self.vectorstore.add_texts(
                texts=[result],
                metadatas=[{"task": task["task_name"]}],
                ids=[result_id],
            )

            # Step 4: Create new tasks and reprioritize the task list
            new_tasks = get_next_task(
                self.task_creation_chain,
                result,
                task["task_name"],
                [t["task_name"] for t in self.task_list],
                objective,
            )
            for new_task in new_tasks:
                self.task_id_counter += 1
                new_task.update({"task_id": self.task_id_counter})
                self.add_task(new_task)
            self.task_list = deque(
                prioritize_tasks(
                    self.task_prioritization_chain,
                    this_task_id,
                    list(self.task_list),
                    objective,
                )
            )

            num_iters += 1
            if self.max_iterations is not None and num_iters == self.max_iterations:
                print("\033[91m\033[1m" + "\n*****TASK ENDING*****\n" + "\033[0m\033[0m")
                break
        return {}

    @classmethod
    def from_llm(
        cls, llm: BaseLLM, vectorstore: VectorStore, verbose: bool = False, **kwargs
    ) -> "BabyAGI":
        """Initialize the BabyAGI controller."""
        task_creation_chain = TaskCreationChain.from_llm(llm, verbose=verbose)
        task_prioritization_chain = TaskPrioritizationChain.from_llm(
            llm, verbose=verbose
        )
        execution_chain = ExecutionChain.from_llm(llm, verbose=verbose)
        return cls(
            task_creation_chain=task_creation_chain,
            task_prioritization_chain=task_prioritization_chain,
            execution_chain=execution_chain,
            vectorstore=vectorstore,
            **kwargs,
        )
```

Finally, run the main program to start BabyAGI.

```python
# Main entry point
if __name__ == "__main__":
    OBJECTIVE = "Analyze today's weather in Beijing and write a storage strategy for fresh flowers."
    llm = OpenAI(temperature=0)
    verbose = False
    max_iterations: Optional[int] = 6
    baby_agi = BabyAGI.from_llm(
        llm=llm, vectorstore=vectorstore, verbose=verbose, max_iterations=max_iterations
    )
    baby_agi({"objective": OBJECTIVE})
```

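While it runs, the agent loops repeatedly. Each iteration prints the current TASK LIST, then the NEXT TASK pulled from that list, then the TASK RESULT for that task. When max_iterations is reached, it prints TASK ENDING and stops.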

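To sum up, we have looked at several recently introduced autonomous agent frameworks, each with its own strengths.

What they share is a single aim: pursuing a long-term objective through continuous self-invocation and self-optimization.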