Model Fine-Tuning 3: Fine-Tuning a Model with Huggingface Transformers

If we want to fine-tune a role-playing model, Huggingface Transformers is a good option.

In this post we fine-tune the Meta-Llama-3-8B-Instruct model with Huggingface Transformers.

To set up the fine-tuning environment, we first install the required dependencies:

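```
!pip install transformers==4.37.2
!pip install pandas==1.5.3
!pip install matplotlib==3.8.0
!pip install numpy==1.26.4
!pip install datasets==2.18.0
!pip install jieba==0.42.1
!pip install rouge-chinese==1.0.3
!pip install tqdm==4.66.1
# train() below also relies on accelerate (for device_map="auto") and flash-attn
# (for attn_implementation="flash_attention_2"); neither is pinned here
```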

We will fine-tune the model with SFT (supervised fine-tuning), which makes it follow instructions and the target style more closely.

Next, we need to prepare the fine-tuning dataset.

It generally follows a Prompt + Response format:

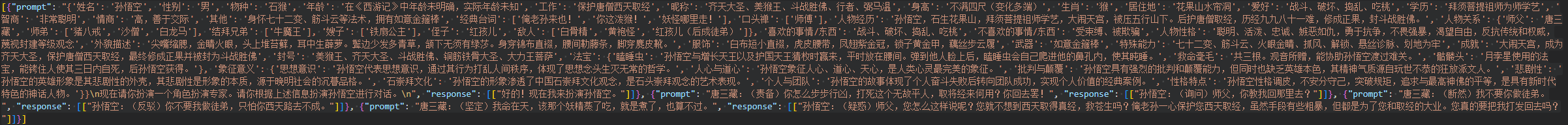
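The `preprocess` function later in this post reads two fields per dialogue:

- `src`: the list of user turns.
- `tgt`: the matching list of assistant replies.

Below is a minimal sketch of one JSONL line in that shape. The `role` field and all of the text are hypothetical placeholders, not CharacterEval content.

```python
import json

# Hypothetical dialogue record: src[i] is the i-th user turn and tgt[i] is the reply to it.
# The "role" field and all text below are made-up placeholders, not CharacterEval content.
record = {
    "role": "ExampleRole",
    "src": ["Hello, who are you?", "What did you do today?"],
    "tgt": ["I am ExampleRole.", "I spent the day reading."],
}

# JSONL stores one JSON object per line; ensure_ascii=False keeps Chinese text readable
line = json.dumps(record, ensure_ascii=False)
print(line)

# preprocess() pairs turns with zip(), so each user turn needs exactly one reply
parsed = json.loads(line)
assert len(parsed["src"]) == len(parsed["tgt"])
```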

In this experiment we use the open-source CharacterEval dataset directly. It contains detailed persona information for each character.

Next, we use Transformers to run full-parameter fine-tuning (updating all weights) of Meta-Llama-3.

Suppose we have a dataset of 1,000 such dialogues, covering different personas and their conversations.

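First, we split it into a training set and an evaluation set, following three principles:

1. The evaluation data comes from the same source as the training data, but the two must not overlap.

2. Frequently used personas should have more training data, which also makes them easier to evaluate.

3. Some personas should not appear in the training data at all, so they can be used to evaluate the model's generalization.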
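It helps to pin the three principles down as checks that a finished split should pass. The sketch below uses a hypothetical `check_split` helper and assumes each row is a dict with `role`, `src` and `tgt` keys. Principle 2 is only approximated here, by requiring that each persona seen in training has at least as many training rows as evaluation rows.

```python
from collections import Counter


def check_split(train_rows, eval_rows, heldout_roles):
    """Sanity-check a train/eval split against the three splitting principles.

    Each row is a dict with the (assumed) keys "role", "src" and "tgt".
    Raises AssertionError on the first violated principle, otherwise returns True.
    """
    def key(row):
        # Identify a dialogue by its persona and full content
        return (row["role"], tuple(row["src"]), tuple(row["tgt"]))

    # Principle 1: no dialogue may appear in both sets
    overlap = {key(r) for r in train_rows} & {key(r) for r in eval_rows}
    assert not overlap, f"{len(overlap)} dialogue(s) appear in both sets"

    train_counts = Counter(r["role"] for r in train_rows)
    eval_counts = Counter(r["role"] for r in eval_rows)

    # Principle 2 (approximation): seen personas keep most of their data in training
    for role, n_eval in eval_counts.items():
        if role in train_counts:
            assert train_counts[role] >= n_eval, f"{role}: fewer train than eval rows"

    # Principle 3: held-out personas appear only in the evaluation set
    for role in heldout_roles:
        assert role not in train_counts, f"held-out persona {role} leaked into training"
        assert role in eval_counts, f"held-out persona {role} missing from evaluation"
    return True
```

Running it on the final `train_df` and `eval_df` (for example, via `df.to_dict("records")`) catches accidental leakage before any GPU time is spent.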

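To meet these principles, we group the dataset by persona.

We then put all the data of 10 personas into the evaluation set, which satisfies the last principle.

For each remaining persona, 10% of its data goes into the evaluation set and the other 90% into the training set.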

```python
import copy
import math
import random

import pandas as pd

# processed_data (a list of dicts with a "role" field) and role_to_id (persona name -> id)
# are assumed to come from an earlier processing step that is not shown here
random.seed(42)
all_data = copy.deepcopy(processed_data)
# Shuffle the data
random.shuffle(all_data)
all_data_df = pd.DataFrame(all_data)

train_df_list = []
eval_df_list = []
role_name_list = list(role_to_id.keys())

# Put every dialogue of the last 10 personas into the evaluation set (unseen personas)
for role_name in role_name_list[-10:]:
    role_df = all_data_df[all_data_df['role'] == role_name]
    eval_df_list.append(role_df)

for role_name in role_name_list[:-10]:
    role_df = all_data_df[all_data_df['role'] == role_name]
    # Use 90% of this persona's data for training and the remaining 10% for evaluation
    train_role_num = math.ceil(len(role_df) * 0.9)
    train_role_df = role_df.iloc[:train_role_num]
    train_df_list.append(train_role_df)
    eval_role_df = role_df.iloc[train_role_num:]
    eval_df_list.append(eval_role_df)

train_df = pd.concat(train_df_list)
eval_df = pd.concat(eval_df_list)
```
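The `train` function below reads `./data/train_ds.jsonl` and `./data/eval_ds.jsonl`, but the split code stops at two DataFrames. One way to bridge the gap is pandas' `DataFrame.to_json` with `orient="records", lines=True`. That call writes one JSON object per line, which is the layout `load_dataset("json", ...)` accepts.

This is a sketch: the toy DataFrames and the temporary directory stand in for the real `train_df`, `eval_df` and `./data` folder.

```python
import os
import tempfile

import pandas as pd

# Toy stand-ins for train_df / eval_df produced by the split above (hypothetical contents)
train_df = pd.DataFrame([
    {"role": "A", "src": ["hi"], "tgt": ["hello"]},
    {"role": "B", "src": ["q1", "q2"], "tgt": ["a1", "a2"]},
])
eval_df = pd.DataFrame([{"role": "C", "src": ["hey"], "tgt": ["hi there"]}])


def save_jsonl(df, path):
    """Write one JSON object per line; the DataFrame index is dropped by orient="records"."""
    os.makedirs(os.path.dirname(path), exist_ok=True)
    # force_ascii=False keeps Chinese text human-readable in the file
    df.to_json(path, orient="records", lines=True, force_ascii=False)


out_dir = tempfile.mkdtemp()  # in this post's setup this would be "./data"
train_path = os.path.join(out_dir, "train_ds.jsonl")
eval_path = os.path.join(out_dir, "eval_ds.jsonl")
save_jsonl(train_df, train_path)
save_jsonl(eval_df, eval_path)

# Read back to confirm the nested src/tgt lists survive the round trip
reloaded = pd.read_json(train_path, lines=True)
print(reloaded)
```

Dropping the index is convenient here, because `pd.concat` leaves the original, non-contiguous row labels on `train_df` and `eval_df`.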

Then comes training.

Its core is the training function:

```python
import torch
import transformers
from datasets import load_dataset
from transformers import AutoTokenizer, AutoModelForCausalLM


def train(
    # model/data params
    base_model: str = "./model/Meta-Llama-3-8B-Instruct",
    max_seq_len: int = 8192,
    train_data_path: str = "./data/train_ds.jsonl",
    val_data_path: str = "./data/eval_ds.jsonl",
    output_dir: str = "./output/role_model/",
    micro_batch_size: int = 8,
    gradient_accumulation_steps: int = 1,
    num_epochs: int = 3,
    learning_rate: float = 3e-5,
    val_set_size: int = 10,
):
    device_map = "auto"  # needs accelerate; places the model's layers across the available GPUs
    # Load the model. Flash Attention 2 only supports fp16/bf16 weights, so load in bf16.
    model = AutoModelForCausalLM.from_pretrained(
        base_model,
        device_map=device_map,
        torch_dtype=torch.bfloat16,
        attn_implementation="flash_attention_2",
    )
    tokenizer = AutoTokenizer.from_pretrained(base_model)
    # Llama-3 has no pad token; use id 0. The collator pads labels with -100,
    # so padded positions never contribute to the loss.
    tokenizer.pad_token_id = 0

    # Load the data from local JSON/JSONL files or from a dataset name/path
    if train_data_path.endswith(".json") or train_data_path.endswith(".jsonl"):
        train_ds = load_dataset("json", data_files=train_data_path)
    else:
        train_ds = load_dataset(train_data_path)

    if val_data_path is not None:
        if val_data_path.endswith(".json") or val_data_path.endswith(".jsonl"):
            val_ds = load_dataset("json", data_files=val_data_path)
        else:
            val_ds = load_dataset(val_data_path)
        train_ds = train_ds["train"]
        val_ds = val_ds["train"]
    else:
        # No validation file: split val_set_size examples off the training data
        train_val = train_ds["train"].train_test_split(
            test_size=val_set_size, shuffle=False, seed=42
        )
        train_ds = train_val["train"]
        val_ds = train_val["test"]

    # Tokenize with the Llama-3 chat template and mask prompt tokens (see preprocess)
    preprocess_kwargs = {"tokenizer": tokenizer, "max_length": max_seq_len, "template": LLAMA3_TEMPLATE}
    train_data = train_ds.map(
        preprocess, fn_kwargs=preprocess_kwargs, batched=True, num_proc=16,
        remove_columns=["src", "tgt"],
    )
    val_data = val_ds.map(
        preprocess, fn_kwargs=preprocess_kwargs, batched=True, num_proc=16,
        remove_columns=["src", "tgt"],
    )

    trainer = transformers.Trainer(
        model=model,
        train_dataset=train_data,
        eval_dataset=val_data,
        args=transformers.TrainingArguments(
            per_device_train_batch_size=micro_batch_size,
            gradient_accumulation_steps=gradient_accumulation_steps,
            warmup_steps=50,
            num_train_epochs=num_epochs,
            learning_rate=learning_rate,
            logging_steps=10,
            optim="adamw_torch",
            bf16=True,  # bf16 mixed precision, matching the bf16 weights loaded above
            evaluation_strategy="steps",  # renamed eval_strategy in newer transformers releases
            save_strategy="steps",
            eval_steps=500,
            save_steps=1000,
            output_dir=output_dir,
            save_total_limit=3,
        ),
        # Pads input_ids with pad_token_id and labels with -100 within each batch
        data_collator=transformers.DataCollatorForSeq2Seq(
            tokenizer, pad_to_multiple_of=8, return_tensors="pt", padding=True
        ),
    )
    trainer.train()
    model.save_pretrained(output_dir, max_shard_size="2GB")  # save the model in shards of at most 2GB
    tokenizer.save_pretrained(output_dir)  # save the tokenizer
```
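Evaluation and checkpointing are driven by step counts, so it helps to estimate how many optimizer steps a run takes. The effective batch per optimizer step is `micro_batch_size` × `gradient_accumulation_steps` × the number of data-parallel processes.

When the script runs as one process with `device_map="auto"`, the model is split across GPUs rather than replicated, so that process count is 1. The helper below is a hypothetical sketch that approximates the Trainer's step bookkeeping. It is not the Trainer's actual code.

```python
import math


def estimate_optimizer_steps(num_examples, micro_batch_size, gradient_accumulation_steps,
                             num_epochs, num_processes=1):
    """Approximate number of optimizer updates for a Trainer run (sketch)."""
    # Batches each process sees per epoch (the last partial batch is kept)
    batches_per_epoch = math.ceil(num_examples / (micro_batch_size * num_processes))
    # One optimizer update every gradient_accumulation_steps batches, at least one per epoch
    updates_per_epoch = max(batches_per_epoch // gradient_accumulation_steps, 1)
    return updates_per_epoch * num_epochs


print(estimate_optimizer_steps(800, micro_batch_size=2, gradient_accumulation_steps=1, num_epochs=3))
```

Take a hypothetical 800 training dialogues with `micro_batch_size=2` and 3 epochs. That gives about 1,200 steps:

- Evaluation runs at steps 500 and 1000.
- One Trainer checkpoint is saved at step 1000, in addition to the final `save_pretrained`.

With the default `micro_batch_size=8`, the same data gives about 300 steps, so `eval_steps=500` would never be reached.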

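Besides that, we need data preprocessing. The data must be formatted the same way as in the model's original training, i.e. with the Llama-3 chat template and its special tokens: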

```python
from typing import List
import copy

import torch
import transformers
from datasets import load_dataset
from transformers import AutoTokenizer, AutoModelForCausalLM

# Llama-3 chat template for one user turn, ending where the assistant reply starts
LLAMA3_TEMPLATE = "<|start_header_id|>user<|end_header_id|>\n\n{}<|eot_id|><|start_header_id|>assistant<|end_header_id|>\n\n"


def preprocess(example, tokenizer, max_length, template=LLAMA3_TEMPLATE):
    """
    Preprocess a dialogue dataset into the format required by the model.
    """
    srcs = example['src']
    tgts = example['tgt']
    result_inputs = []
    result_labels = []
    result_attention_mask = []
    eot_id = tokenizer.convert_tokens_to_ids("<|eot_id|>")
    # Each item is one dialogue: a list of user turns and the matching list of replies
    for idx, (questions, answers) in enumerate(zip(srcs, tgts)):
        utterances = []
        for i, (src, tgt) in enumerate(zip(questions, answers)):
            if i == 0:
                utterances.append('<|begin_of_text|>' + template.format(src))
            else:
                utterances.append(template.format(src))
            utterances.append(tgt)
        utterances_ids = tokenizer(utterances, add_special_tokens=False, max_length=max_length,
                                   truncation=True).input_ids

        input_ids = []
        label = []  # used to mask the input so that the loss is computed only on the target
        for i, utterances_id in enumerate(utterances_ids):
            if i % 2 == 0:
                # instruction: mask its labels
                input_ids += utterances_id
                label += ([-100] * len(utterances_id))
            else:
                # response: learn the reply and the end-of-turn token
                input_ids += (utterances_id + [eot_id])
                label += (utterances_id + [eot_id])
        assert len(input_ids) == len(label)
        # truncate to max_length
        input_ids = input_ids[:max_length]
        label = label[:max_length]
        attention_mask = [1] * len(input_ids)
        result_inputs.append(input_ids)
        result_labels.append(label)
        result_attention_mask.append(attention_mask)
    return dict(input_ids=result_inputs, labels=result_labels, attention_mask=result_attention_mask)
```
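The masking logic in `preprocess` can be seen without loading a tokenizer. The sketch below uses made-up integer token ids and a hypothetical helper, `build_example`, that mirrors the loop above:

- Prompt tokens get label -100, the index that PyTorch's cross-entropy and the Transformers causal-LM loss ignore.
- Each reply and its `<|eot_id|>` token are learned.

Transformers causal LMs shift labels by one position internally, so `labels` stays aligned position-by-position with `input_ids`.

```python
IGNORE_INDEX = -100  # label value skipped by the cross-entropy loss


def build_example(turn_ids, eot_id, max_length):
    """turn_ids alternates [prompt_0, reply_0, prompt_1, reply_1, ...] as lists of token ids."""
    input_ids, labels = [], []
    for i, ids in enumerate(turn_ids):
        if i % 2 == 0:
            # Prompt tokens are fed to the model but excluded from the loss
            input_ids += ids
            labels += [IGNORE_INDEX] * len(ids)
        else:
            # Reply tokens plus the end-of-turn token are what the model learns
            input_ids += ids + [eot_id]
            labels += ids + [eot_id]
    # Truncate after concatenation, as preprocess does
    input_ids, labels = input_ids[:max_length], labels[:max_length]
    return input_ids, labels, [1] * len(input_ids)


ids, labels, mask = build_example([[11, 12, 13], [21, 22], [14], [23]], eot_id=99, max_length=32)
print(ids)     # [11, 12, 13, 21, 22, 99, 14, 23, 99]
print(labels)  # [-100, -100, -100, 21, 22, 99, -100, 23, 99]
```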

Finally, call the `train` function:

```python
train(
    # model/data params
    base_model="/mnt/cfs_bj/big_model/models/meta-llama/Meta-Llama-3-8B-Instruct/",
    train_data_path="./data/train_ds.jsonl",
    val_data_path="./data/eval_ds.jsonl",
    output_dir="./output/role_model/",
    micro_batch_size=2,
    gradient_accumulation_steps=1,
    num_epochs=3,
    learning_rate=3e-5,
)
```

After training, the model needs two kinds of evaluation: human evaluation and automatic evaluation.
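The dependencies installed at the start include jieba and rouge-chinese, which suggests a ROUGE-style overlap metric for the automatic part. rouge-chinese scores whitespace-separated tokens, so it would typically be paired with jieba word segmentation.

As a dependency-free illustration of the idea, here is a sketch of ROUGE-L F1 computed over individual characters. This is a simplification swapped in for word-level scoring, not the metric the post necessarily used.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of sequences a and b (dynamic programming)."""
    prev = [0] * (len(b) + 1)
    for x in a:
        cur = [0]
        for j, y in enumerate(b, start=1):
            # Extend the diagonal on a match, otherwise keep the best of left/up
            cur.append(prev[j - 1] + 1 if x == y else max(prev[j], cur[j - 1]))
        prev = cur
    return prev[-1]


def rouge_l_f1(hypothesis, reference):
    """Character-level ROUGE-L F1 (beta = 1) between a model reply and a reference reply."""
    hyp, ref = list(hypothesis), list(reference)
    if not hyp or not ref:
        return 0.0
    lcs = lcs_length(hyp, ref)
    if lcs == 0:
        return 0.0
    precision, recall = lcs / len(hyp), lcs / len(ref)
    return 2 * precision * recall / (precision + recall)


print(rouge_l_f1("我今天在读书", "我今天在看书"))
```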

For human evaluation, we use a scoring scheme.

Each response receives a score from 4 to 0.
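Once annotators have scored responses, the scores need to be aggregated. Below is a minimal sketch, assuming a hypothetical list of `(role, annotator, score)` records. It averages per persona, which makes it easy to compare the held-out personas with the ones seen in training, and it rejects scores outside the 4-0 range.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical annotation records: (role, annotator, score on the 4-0 scale)
ratings = [
    ("RoleA", "annotator_1", 4),
    ("RoleA", "annotator_2", 3),
    ("RoleB", "annotator_1", 2),
    ("RoleB", "annotator_2", 1),
]


def summarize(ratings, min_score=0, max_score=4):
    """Return (mean score per role, overall mean score), validating the score range."""
    by_role = defaultdict(list)
    for role, _annotator, score in ratings:
        if not min_score <= score <= max_score:
            raise ValueError(f"score {score} outside {min_score}-{max_score}")
        by_role[role].append(score)
    per_role = {role: mean(scores) for role, scores in by_role.items()}
    overall = mean(score for _, _, score in ratings)
    return per_role, overall


per_role, overall = summarize(ratings)
print(per_role, overall)
```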