1. Why Do We Need Ingress

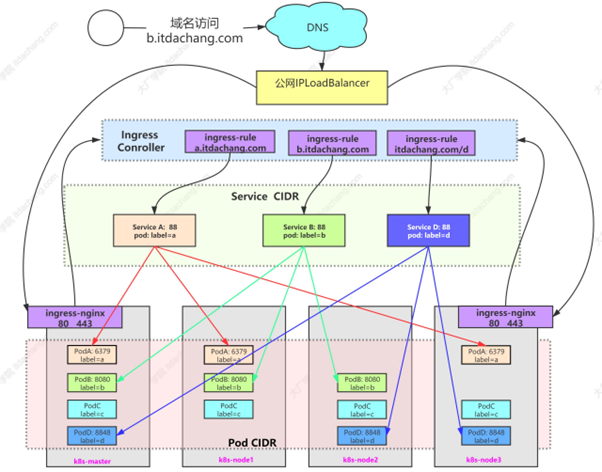

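Let's start with a diagram.

At the very bottom is the Pod network.

This is the Pod CIDR we set when bootstrapping the K8S cluster.

Pods on this layer can all reach one another directly.

One layer up, we abstract out the Service layer, which manages the actual Pods, i.e. the actual workloads. Its purpose, however, is still intra-cluster communication: it provides higher-level connectivity within the cluster.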

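As covered in the previous post on Services, a Service can expose a port externally via NodePort, but that approach performs poorly and is not secure.

Moreover, a Service usually manages a single microservice, whereas we need to aggregate multiple microservices into one service cluster and apply load balancing, rate limiting, and so on.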

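This calls for a network object one layer higher, responsible for distributing traffic to different Service objects: the Ingress.

An Ingress exposes HTTP and HTTPS routes from outside the cluster to Services inside it. When traffic arrives, it forwards each request to the matching Service according to its configured routing rules.

That is the Ingress layer in the diagram above.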
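To make the routing idea concrete, here is a minimal sketch of one Ingress fanning traffic out to two microservices by path prefix. The host `shop.example.com`, the Services `order-svc` and `user-svc`, and the IngressClass name `nginx` are assumptions chosen for illustration, not part of the setup described here.

```yaml
# Hypothetical fan-out: one entry point, two backend Services selected by path prefix
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: fanout-example          # hypothetical name
  namespace: default
spec:
  ingressClassName: nginx       # assumed IngressClass name
  rules:
    - host: shop.example.com    # hypothetical host
      http:
        paths:
          - path: /order        # /order and /order/... go to order-svc
            pathType: Prefix
            backend:
              service:
                name: order-svc # hypothetical Service
                port:
                  number: 80
          - path: /user         # /user and /user/... go to user-svc
            pathType: Prefix
            backend:
              service:
                name: user-svc  # hypothetical Service
                port:
                  number: 80
```

Each Service still load-balances across its own Pods; the Ingress only decides which Service a request goes to.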

The layers above that, such as the LoadBalancer and DNS, belong to public-network configuration, so we'll skip them for now.

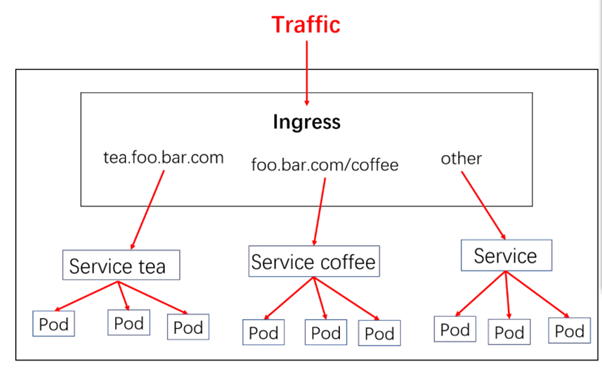

The figure above is a simplified version of the diagram.

This is the context in which Ingress emerged.

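Having covered where Ingress comes from, how is Ingress actually implemented in K8S?

The most common Ingress implementation is based on Nginx.

In K8S, Nginx is combined with Ingress in two different projects: ingress-nginx and NGINX Ingress.

NGINX Ingress is made by NGINX itself and comes in an open-source edition and a commercial edition. Its documentation is here: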

https://www.nginx.com/products/nginx-ingress-controller

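Because of the commercial edition, we don't recommend it here.

ingress-nginx, on the other hand, is maintained by the Kubernetes project and is more widely used. Its documentation is here: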

https://kubernetes.github.io/ingress-nginx/examples/auth/basic/

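The ingress-nginx documentation lists the supported deployment methods.

Most of them target cloud providers, so we go straight to the last one, Bare metal clusters,

i.e. deployment on bare-metal machines.

The official instruction is a single command:

```shell
kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.1.1/deploy/static/provider/baremetal/deploy.yaml
```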

Let's break down this file.

First comes a Namespace:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
```

Then it creates the ServiceAccount, ClusterRole, and ClusterRoleBinding, along with a namespaced Role and RoleBinding:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.15
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.1
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
automountServiceAccountToken: true
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.15
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.1
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - configmaps
      - endpoints
      - nodes
      - pods
      - secrets
      - namespaces
    verbs:
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - nodes
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
---
# Source: ingress-nginx/templates/clusterrolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.15
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.1
    app.kubernetes.io/managed-by: Helm
  name: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
---
# Source: ingress-nginx/templates/controller-role.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.15
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.1
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
rules:
  - apiGroups:
      - ''
    resources:
      - namespaces
    verbs:
      - get
  - apiGroups:
      - ''
    resources:
      - configmaps
      - pods
      - secrets
      - endpoints
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - services
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingresses/status
    verbs:
      - update
  - apiGroups:
      - networking.k8s.io
    resources:
      - ingressclasses
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - ''
    resources:
      - configmaps
    resourceNames:
      - ingress-controller-leader
    verbs:
      - get
      - update
  - apiGroups:
      - ''
    resources:
      - configmaps
    verbs:
      - create
  - apiGroups:
      - ''
    resources:
      - events
    verbs:
      - create
      - patch
---
# Source: ingress-nginx/templates/controller-rolebinding.yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.15
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.1
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx
  namespace: ingress-nginx
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: ingress-nginx
subjects:
  - kind: ServiceAccount
    name: ingress-nginx
    namespace: ingress-nginx
```

None of the above normally needs changing, neither the Namespace nor the RBAC part. The parts that matter are these:

```yaml
# Source: ingress-nginx/templates/controller-service.yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    helm.sh/chart: ingress-nginx-4.0.15
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.1
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: NodePort
  ipFamilyPolicy: SingleStack
  ipFamilies:
    - IPv4
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
      appProtocol: http
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
      appProtocol: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
---
# Source: ingress-nginx/templates/controller-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.15
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.1
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      dnsPolicy: ClusterFirst
      containers:
        - name: controller
          image: k8s.gcr.io/ingress-nginx/controller:v1.1.1@sha256:0bc88eb15f9e7f84e8e56c14fa5735aaa488b840983f87bd79b1054190e660de
          imagePullPolicy: IfNotPresent
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --controller-class=k8s.io/ingress-nginx
            - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: LD_PRELOAD
              value: /usr/local/lib/libmimalloc.so
          livenessProbe:
            failureThreshold: 5
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            - name: webhook
              containerPort: 8443
              protocol: TCP
          volumeMounts:
            - name: webhook-cert
              mountPath: /usr/local/certificates/
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
        - name: webhook-cert
          secret:
            secretName: ingress-nginx-admission
```

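This declares a Service of type NodePort, which maps ports 80 and 443 to randomly assigned ports on every host (by default from the 30000-32767 range) so they can be reached from outside.

With this approach, however, the ports are random; we would rather use the fixed host ports 80 and 443.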
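If fixed ports inside the NodePort range are acceptable, one option short of host networking is to pin the `nodePort` values instead of letting Kubernetes pick them. A sketch, assuming the default NodePort range (30000-32767) and that the arbitrarily chosen ports 30080 and 30443 are free on every node:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
      nodePort: 30080   # fixed instead of random; must lie inside the cluster's NodePort range
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
      nodePort: 30443   # arbitrary example value
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
```

Clients still have to append `:30080` or `:30443` (or sit behind an external load balancer), which is why the host-network approach below is used to get ports 80 and 443 themselves.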

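This deployment approach is also covered in the official docs:

https://kubernetes.github.io/ingress-nginx/deploy/baremetal/

See the "Via the host network" section.

To use fixed host ports, let the controller Pods share the host's network (`hostNetwork: true`),

and change the Deployment into a DaemonSet so that a controller Pod runs on each eligible node.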

The main changes are as follows. First, set the Service's `type` to `ClusterIP`:

```yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
  labels:
    helm.sh/chart: ingress-nginx-3.30.0
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 0.46.0
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  type: ClusterIP  # changed to ClusterIP
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: http
    - name: https
      port: 443
      protocol: TCP
      targetPort: https
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/component: controller
```

Then turn the Deployment into a DaemonSet that runs on the host network:

```yaml
# Source: ingress-nginx/templates/controller-deployment.yaml
apiVersion: apps/v1
kind: DaemonSet  # changed from Deployment to DaemonSet
metadata:
  labels:
    helm.sh/chart: ingress-nginx-4.0.15
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/instance: ingress-nginx
    app.kubernetes.io/version: 1.1.1
    app.kubernetes.io/managed-by: Helm
    app.kubernetes.io/component: controller
  name: ingress-nginx-controller
  namespace: ingress-nginx
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/instance: ingress-nginx
      app.kubernetes.io/component: controller
  revisionHistoryLimit: 10
  minReadySeconds: 0
  template:
    metadata:
      labels:
        app.kubernetes.io/name: ingress-nginx
        app.kubernetes.io/instance: ingress-nginx
        app.kubernetes.io/component: controller
    spec:
      dnsPolicy: ClusterFirstWithHostNet  # keeps cluster DNS resolution working under hostNetwork
      hostNetwork: true  # use the host's network namespace
      containers:
        - name: controller
          image: k8s.gcr.io/ingress-nginx/controller:v1.1.1@sha256:0bc88eb15f9e7f84e8e56c14fa5735aaa488b840983f87bd79b1054190e660de
          imagePullPolicy: IfNotPresent
          lifecycle:
            preStop:
              exec:
                command:
                  - /wait-shutdown
          args:
            - /nginx-ingress-controller
            - --election-id=ingress-controller-leader
            - --controller-class=k8s.io/ingress-nginx
            - --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
            - --validating-webhook=:8443
            - --validating-webhook-certificate=/usr/local/certificates/cert
            - --validating-webhook-key=/usr/local/certificates/key
          securityContext:
            capabilities:
              drop:
                - ALL
              add:
                - NET_BIND_SERVICE
            runAsUser: 101
            allowPrivilegeEscalation: true
          env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            - name: LD_PRELOAD
              value: /usr/local/lib/libmimalloc.so
          livenessProbe:
            failureThreshold: 5
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /healthz
              port: 10254
              scheme: HTTP
            initialDelaySeconds: 10
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          ports:
            - name: http
              containerPort: 80
              protocol: TCP
            - name: https
              containerPort: 443
              protocol: TCP
            - name: webhook
              containerPort: 8443
              protocol: TCP
          volumeMounts:
            - name: webhook-cert
              mountPath: /usr/local/certificates/
              readOnly: true
          resources:
            requests:
              cpu: 100m
              memory: 90Mi
      nodeSelector:
        kubernetes.io/os: linux
      serviceAccountName: ingress-nginx
      terminationGracePeriodSeconds: 300
      volumes:
        - name: webhook-cert
          secret:
            secretName: ingress-nginx-admission
```

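After that, deploy the modified manifest as usual.

Next, let's cover basic Ingress usage.

A minimal Ingress YAML looks like this:

```yaml
# https://kubernetes.io/docs/concepts/services-networking/ingress/#the-ingress-resource
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-name  # object names must be lowercase (RFC 1123), so "IngressName" would be rejected
  namespace: default
spec:
  rules:
    - host: foo.bar.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-nginx
                port:
                  number: 80
```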

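In `spec`, we declare a `host`.

The host is the host name the request was sent to, similar to the `server_name` directive in nginx.

Under it, we set the HTTP `path` and its `pathType`.

`pathType` is described as follows: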

> `pathType <string>`
>
> PathType determines the interpretation of the Path matching. PathType can be one of the following values:
>
> * Exact: Matches the URL path exactly.
> * Prefix: Matches based on a URL path prefix split by '/'. Matching is done on a path element by element basis. A path element refers is the list of labels in the path split by the '/' separator. A request is a match for path p if every p is an element-wise prefix of p of the request path. Note that if the last element of the path is a substring of the last element in request path, it is not a match (e.g. /foo/bar matches /foo/bar/baz, but does not match /foo/barbaz).
> * ImplementationSpecific: Interpretation of the Path matching is up to the IngressClass. Implementations can treat this as a separate PathType or treat it identically to Prefix or Exact path types.
>
> Implementations are required to support all path types.

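So the available values are `Prefix` (prefix matching), `Exact` (exact matching), and `ImplementationSpecific` (matching is defined by the IngressClass, i.e. left to the Ingress controller).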
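A small sketch contrasting `Exact` and `Prefix`; the Services `health-svc` and `api-v1-svc` and the IngressClass name `nginx` are assumptions for illustration. The comments describe the matching behavior defined by the Kubernetes Ingress specification.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: pathtype-example        # hypothetical name
  namespace: default
spec:
  ingressClassName: nginx       # assumed IngressClass name
  rules:
    - host: foo.bar.com
      http:
        paths:
          - path: /healthz
            pathType: Exact     # matches /healthz only; /healthz/ and /healthz/x do not match
            backend:
              service:
                name: health-svc    # hypothetical Service
                port:
                  number: 80
          - path: /api/v1
            pathType: Prefix    # matches /api/v1, /api/v1/ and /api/v1/users, but not /api/v1beta
            backend:
              service:
                name: api-v1-svc    # hypothetical Service
                port:
                  number: 80
```

When several paths match one request, the longest matching path wins; if two are still equally long, an `Exact` path takes precedence over a `Prefix` path.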

After that, the backend `service` and its `port` are declared.

Once this Ingress is created, the Ingress Controller picks it up and syncs it to all the Nginx instances it runs.

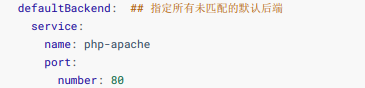
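One detail the example above leaves implicit is which controller picks the Ingress up. As we understand it, ingress-nginx v1.x only processes Ingresses whose `spec.ingressClassName` names its IngressClass, or class-less Ingresses when a default IngressClass exists (or when the controller runs with `--watch-ingress-without-class=true`). A sketch of marking the class as the cluster default, assuming it is named `nginx` as in the upstream manifest and matches the `--controller-class=k8s.io/ingress-nginx` argument in the Deployment above:

```yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: nginx                          # assumed name of the class ingress-nginx installs
  annotations:
    # Ingresses created without spec.ingressClassName are assigned to this class
    ingressclass.kubernetes.io/is-default-class: "true"
spec:
  controller: k8s.io/ingress-nginx     # must match the controller's --controller-class value
```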

Next is the default backend.

We can set `defaultBackend` in `spec` to handle every request that matches no rule.
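A minimal sketch of an Ingress whose only job is to catch unmatched traffic; the Service `fallback-svc` and the IngressClass name `nginx` are hypothetical placeholders. An Ingress must specify `defaultBackend`, `rules`, or both, so this object is valid without any rules.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: default-backend-example   # hypothetical name
  namespace: default
spec:
  ingressClassName: nginx         # assumed IngressClass name
  defaultBackend:                 # receives every request that no rule matches
    service:
      name: fallback-svc          # hypothetical Service, e.g. a custom 404 page
      port:
        number: 80
```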

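At first glance that seems to be all an Ingress can configure. In practice, much of the configuration is hidden: most of Ingress's extended functionality is delegated to the Ingress Controller.

The Ingress Controller offers two levels of configuration: global and per-Ingress.

For global configuration, the official documentation is:

https://kubernetes.github.io/ingress-nginx/user-guide/nginx-configuration/configmap/

Its first section lists all available options.

To apply them, edit the ConfigMap named `ingress-nginx-controller` in the Ingress Controller's namespace.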

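For example, suppose we want to add a few headers. These are not written into the controller ConfigMap directly: they go into a separate ConfigMap, which the controller ConfigMap then references through the `proxy-set-headers` key (headers sent to the backends) or the `add-headers` key (headers added to responses sent to clients). The data of such a header ConfigMap looks like this:

Data:

X-Different-Name: "true"

X-Request-Start: t=${msec}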
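A sketch of the two ConfigMaps involved, modeled on the ingress-nginx custom-headers example. The name `custom-headers` is our choice; `proxy-set-headers` takes a `namespace/name` reference.

```yaml
# Separate ConfigMap holding the headers to inject (name chosen for this example)
apiVersion: v1
kind: ConfigMap
metadata:
  name: custom-headers
  namespace: ingress-nginx
data:
  X-Different-Name: "true"
  X-Request-Start: t=${msec}   # ${msec} is an nginx variable: current time in seconds, millisecond resolution
---
# The controller's global ConfigMap, the one named by --configmap=$(POD_NAMESPACE)/ingress-nginx-controller
apiVersion: v1
kind: ConfigMap
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
data:
  proxy-set-headers: "ingress-nginx/custom-headers"   # headers sent upstream to the backends
```

If the controller ConfigMap already holds other keys, add `proxy-set-headers` with `kubectl edit` rather than replacing the whole object, and use `add-headers` instead if the headers should go to clients.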

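The second level is per-Ingress configuration.

It is implemented through annotations on the Ingress.

Let's take rate limiting as an example; the reference page is "Annotations - NGINX Ingress Controller" (kubernetes.github.io).

Declare the following in the Ingress:

nginx.ingress.kubernetes.io/limit-rps: "5"

This accepts at most 5 requests per second from each client IP (with a default burst allowance of five times that limit); requests beyond it receive a 503.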

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-222333
  namespace: default
  annotations:
    nginx.ingress.kubernetes.io/limit-rps: "5"  # annotation values must be strings, so the number is quoted
spec:
  defaultBackend:  # handles every path not matched by the rules below
    service:
      name: php-apache
      port:
        number: 80
  rules:
    - host: it666.com
      http:
        paths:
          - path: /bbbbb
            pathType: Prefix
            backend:
              service:
                name: cluster-service-222
                port:
                  number: 80
```
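Rate limiting can be tuned along other axes too. A sketch using the `limit-rpm`, `limit-burst-multiplier`, and `limit-connections` annotations from the ingress-nginx annotations reference; the values and the Ingress name are placeholders, and the backend reuses `cluster-service-222` from the example above purely for illustration.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ratelimit-example        # hypothetical name
  namespace: default
  annotations:
    nginx.ingress.kubernetes.io/limit-rpm: "300"              # per client IP: at most 300 requests per minute
    nginx.ingress.kubernetes.io/limit-burst-multiplier: "2"   # burst = limit x 2 instead of the default x 5
    nginx.ingress.kubernetes.io/limit-connections: "10"       # per client IP: at most 10 concurrent connections
spec:
  ingressClassName: nginx        # assumed IngressClass name
  rules:
    - host: it666.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: cluster-service-222
                port:
                  number: 80
```

These annotations apply only to the paths of the Ingress that carries them, so different routes on the same host can get different limits.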

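Beyond rate limiting, here are a few more examples of changing Ingress behavior with annotations.

One is session affinity.

Here affinity is no longer declared on the Service (its `sessionAffinity` field) but directly on the Ingress, by adding the following annotation:

`nginx.ingress.kubernetes.io/affinity`, whose only supported value is `cookie`.

That is enough to enable cookie-based session affinity.

By default, a cookie named INGRESSCOOKIE is set on the client.

If you don't like that name, change it with the `nginx.ingress.kubernetes.io/session-cookie-name` annotation.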

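Annotations:

affinity: cookie

session-cookie-name: INGRESSCOOKIE

session-cookie-expires: 172800

session-cookie-max-age: 172800

Each key carries the `nginx.ingress.kubernetes.io/` prefix; both time values are in seconds, so 172800 is 48 hours.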
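Put together, a sketch of an Ingress with cookie-based affinity. The host `sticky.bar.com` and the IngressClass name `nginx` are assumptions, and `my-nginx` is reused from the basic example for illustration. Annotation values must be strings, so the numbers are quoted.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: affinity-example        # hypothetical name
  namespace: default
  annotations:
    nginx.ingress.kubernetes.io/affinity: "cookie"                 # the only supported affinity type
    nginx.ingress.kubernetes.io/session-cookie-name: "INGRESSCOOKIE"
    nginx.ingress.kubernetes.io/session-cookie-expires: "172800"   # seconds (48 hours)
    nginx.ingress.kubernetes.io/session-cookie-max-age: "172800"   # seconds (48 hours)
spec:
  ingressClassName: nginx       # assumed IngressClass name
  rules:
    - host: sticky.bar.com      # hypothetical host
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-nginx
                port:
                  number: 80
```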

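Another feature often needed when the frontend and backend are separated is path rewriting.

The frontend handles requests under `/`.

Requests meant for the backend are sent under `/api`, but the backend implements its endpoints relative to `/`, so the Ingress has to strip the prefix automatically.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
  name: rewrite
  namespace: default
spec:
  ingressClassName: nginx
  rules:
    - host: rewrite.bar.com
      http:
        paths:
          - path: /something(/|$)(.*)
            pathType: ImplementationSpecific  # regex paths are controller-specific, so this is the matching pathType
            backend:
              service:
                name: http-svc
                port:
                  number: 80
```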

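In `nginx.ingress.kubernetes.io/rewrite-target` above, we set the rewrite target to `/$2`.

`$2` refers to the second capture group of the regular expression in `path`, namely `(.*)`, the part after `/something/`.

After applying this YAML: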

· rewrite.bar.com/something rewrites to rewrite.bar.com/

· rewrite.bar.com/something/ rewrites to rewrite.bar.com/

· rewrite.bar.com/something/new rewrites to rewrite.bar.com/new
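Applied to the frontend/backend split described earlier, a sketch might use two Ingresses for the same host: one serving the frontend at `/` unchanged, and one that strips `/api` before forwarding. Two objects are needed because `rewrite-target` applies to every path of the Ingress that carries it. The host `app.example.com` and the Services `frontend-svc` and `backend-svc` are hypothetical.

```yaml
# Frontend: no rewrite, everything under / goes to the frontend
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app-frontend            # hypothetical name
  namespace: default
spec:
  ingressClassName: nginx
  rules:
    - host: app.example.com     # hypothetical host
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: frontend-svc   # hypothetical Service
                port:
                  number: 80
---
# Backend: /api/users reaches the backend as /users, /api reaches it as /
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: app-backend             # hypothetical name
  namespace: default
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /$2
spec:
  ingressClassName: nginx
  rules:
    - host: app.example.com
      http:
        paths:
          - path: /api(/|$)(.*)
            pathType: ImplementationSpecific
            backend:
              service:
                name: backend-svc    # hypothetical Service
                port:
                  number: 80
```

Once any Ingress for a host uses `rewrite-target`, ingress-nginx treats every path on that host as a regex, including the frontend's `/`. Because the controller orders paths by descending length before writing nginx locations, the longer `/api...` path should still be tried first, while a path such as `/apix` falls through to the frontend.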

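Next, let's look at configuring SSL on an Ingress, and at canary deployments with Ingress.

Enabling SSL on an Ingress isn't hard: HTTPS only needs the certificate and private-key files.

On K8S, both can be stored in a Secret.

On a cloud platform you can simply request a certificate; we won't go through the cloud-specific workflow here.

For local testing, you can generate a self-signed (untrusted) certificate:

```shell
# ${VAR:-default} falls back to the default value when VAR is unset
openssl req -x509 -nodes -days 365 -newkey rsa:2048 \
  -keyout "${KEY_FILE:-tls.key}" -out "${CERT_FILE:-tls.crt}" \
  -subj "/CN=${HOST:-foo.bar.com}/O=${HOST:-foo.bar.com}"
```

Then create a Secret object on K8S:

```shell
kubectl create secret tls "${CERT_NAME:-tls-test-secret}" --key "${KEY_FILE:-tls.key}" --cert "${CERT_FILE:-tls.crt}"
```

Then reference the Secret by name in the Ingress. The manifest below also declares the Secret in YAML, as an alternative to the `kubectl` command; in that form, the `data` values must be the base64-encoded file contents.

```yaml
# https://kubernetes.io/docs/concepts/services-networking/ingress/#tls
apiVersion: v1
kind: Secret
metadata:
  name: tls-test-secret
  namespace: default
type: kubernetes.io/tls
# The TLS secret must contain keys named 'tls.crt' and 'tls.key' that contain the certificate and private key to use for TLS.
data:
  tls.crt: <base64-encoded certificate>  # placeholder, replace before applying
  tls.key: <base64-encoded private key>  # placeholder, replace before applying
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tls-example-ingress
  namespace: default
spec:
  tls:
    - hosts:
        - foo.bar.com
      secretName: tls-test-secret
  rules:
    - host: foo.bar.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-nginx
                port:
                  number: 80
```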

With this in place, the site is served over HTTPS.

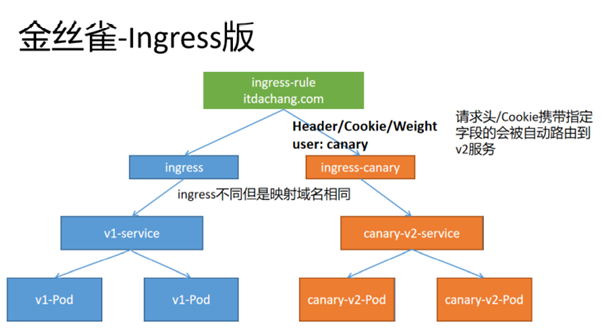
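Related to this: by default, ingress-nginx answers plain-HTTP requests for a TLS-enabled host with a 308 redirect to HTTPS. A sketch of turning that off for one Ingress (for example during testing) via the `ssl-redirect` annotation, written as a variant of the Ingress above that would replace it rather than run alongside it. The opposite annotation, `force-ssl-redirect`, forces the redirect even when the Ingress has no `tls` section.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: tls-example-ingress
  namespace: default
  annotations:
    nginx.ingress.kubernetes.io/ssl-redirect: "false"   # keep serving plain HTTP as well as HTTPS
spec:
  tls:
    - hosts:
        - foo.bar.com
      secretName: tls-test-secret
  rules:
    - host: foo.bar.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-nginx
                port:
                  number: 80
```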

Canary releases with Ingress in K8S essentially work like this: deploy two versions as two Services, and use Ingress rules to control which version receives each request.

Let's see how Ingress implements this.

First, deploy two Services, one for each version:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ingress-canary-01
  namespace: default
spec:
  selector:
    app: nginx-v1
  type: ClusterIP
  sessionAffinity: None  # no affinity, so no sessionAffinityConfig is needed
  ports:
    - name: nginx
      protocol: TCP
      port: 80
      targetPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: ingress-canary-02
  namespace: default
spec:
  selector:
    app: nginx-v2
  type: ClusterIP
  sessionAffinity: None
  ports:
    - name: nginx
      protocol: TCP
      port: 80
      targetPort: 80
```

Next, suppose an Ingress for the old version, V1, already exists:

```yaml
# https://kubernetes.io/docs/concepts/services-networking/ingress/#the-ingress-resource
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-v1  # lowercase, and distinct from the canary Ingress's name
  namespace: default
spec:
  rules:
    - host: foo.bar.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: ingress-canary-01  # the v1 Service defined above
                port:
                  number: 80
```

Then comes a canary Ingress:

```yaml
# https://kubernetes.io/docs/concepts/services-networking/ingress/#the-ingress-resource
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-canary  # must differ from the main Ingress's name
  namespace: default
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"  # mark this Ingress as the canary
    nginx.ingress.kubernetes.io/canary-by-header: "use-version"  # header name; without a header-value, "always" routes to the canary and "never" never does
    nginx.ingress.kubernetes.io/canary-by-header-value: "v2"  # only this exact header value routes to the canary; other values fall through to the next rule
    nginx.ingress.kubernetes.io/canary-by-cookie: "use-version"  # cookie name; value "always" or "never"
    nginx.ingress.kubernetes.io/canary-weight: "50"  # percentage (0-100) of the remaining requests sent to the canary
spec:
  rules:
    - host: foo.bar.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: ingress-canary-02  # the v2 Service defined above
                port:
                  number: 80
```

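Normally, two Ingresses for the same host and path would conflict.

Here, however, `canary` is set to `"true"`, which marks this Ingress as the canary (new) version.

The remaining annotations define how a request reaches the canary version:

by header, by cookie, or at random according to the weight.

The precedence is:

canary-by-header -> canary-by-cookie -> canary-weight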
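When a request carries neither the header nor the cookie, only the weight decides. A sketch of a pure percentage rollout that sends roughly 10% of requests to v2; the Ingress name is hypothetical. Since ingress-nginx supports at most one canary Ingress per Ingress rule, this would replace the canary Ingress above rather than sit alongside it.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-canary-weight     # hypothetical name
  namespace: default
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"
    nginx.ingress.kubernetes.io/canary-weight: "10"   # roughly 10% of requests go to the canary
spec:
  rules:
    - host: foo.bar.com           # same host and path as the main Ingress
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: ingress-canary-02   # the v2 Service
                port:
                  number: 80
```

Raising the weight step by step (10, 30, 50, 100) gives a gradual rollout without touching the main Ingress.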

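This lets a caller choose whether to hit the new version when calling the API.

Once testing is complete, point the original Ingress at the new version's Service and take the canary Ingress offline.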
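A sketch of that final step, assuming the main Ingress is updated in place: its backend switches to the v2 Service `ingress-canary-02`, and afterwards the canary Ingress is removed with `kubectl delete ingress <name-of-canary-ingress>`. The Ingress name below is a placeholder for whatever your main Ingress is called.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-v1                # placeholder: the name of the existing main Ingress
  namespace: default
spec:
  rules:
    - host: foo.bar.com
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: ingress-canary-02   # now the v2 Service; previously ingress-canary-01
                port:
                  number: 80
```

Applying this before deleting the canary Ingress means every route already ends at v2, so the switch-over should not send requests back to v1.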